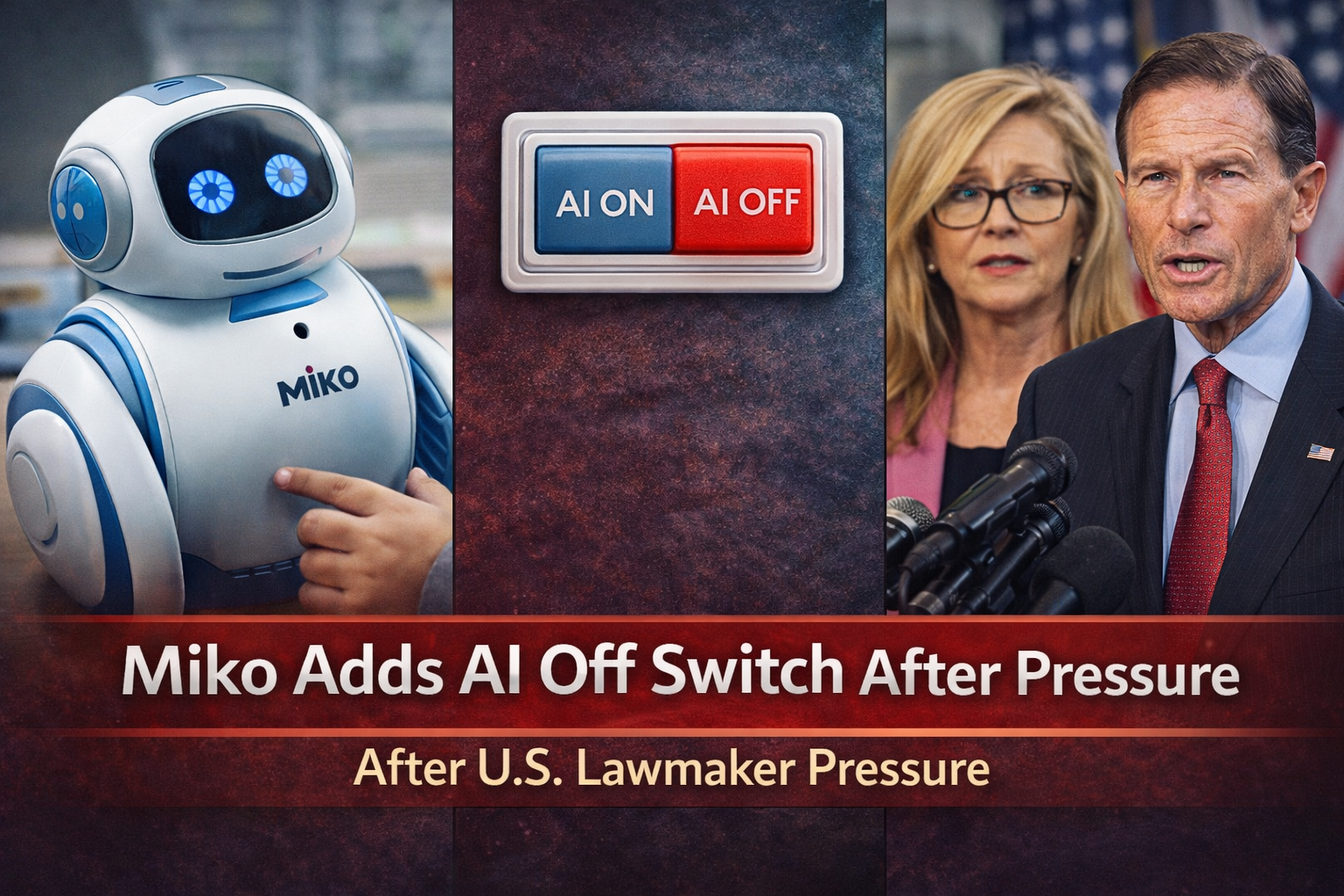

The last several years have seen a rise in AI-powered toys that offer children interactive learning, storytelling, and conversation. Concerns about privacy and data security have grown alongside them. The latest development comes from Miko, a company that specializes in smart robot toys for children: under pressure from U.S. lawmakers, it is adding an AI on-off switch to its products.

The move follows concerns from U.S. lawmakers about how children’s interactions with AI toys are managed, stored, and safeguarded. It has fed a larger debate over whether artificial intelligence is safe in products made specifically for young users.

What Miko Toys Do

Miko makes interactive robot toys, such as the Miko 3, which is designed to serve as a learning partner for children. These robots can hold conversations, answer questions, tell stories, play games, and help with learning. The toys use artificial intelligence to respond in real time, which makes interactions feel natural and personal.

Because these robots communicate directly with children using advanced AI models, they collect and process conversational data to produce responses. Although the company says it does not keep sensitive personal information, the very idea of artificial intelligence speaking directly with children has raised serious safety concerns.

Political Pressure From Lawmakers

The decision to add the AI off switch came after U.S. senators, including Marsha Blackburn and Richard Blumenthal, criticized the company. The lawmakers’ concern grew after reports indicated that a database connected to Miko’s AI system might have been openly accessible online.

According to the reports cited by the senators, thousands of AI-generated responses to children’s interactions were allegedly exposed through an unprotected database. Although Miko denied that voice recordings or sensitive personal information had been leaked, the incident drew a strong response from legislators, who argued that children’s privacy must be protected at all costs.

The senators publicly questioned the decision to let AI toys operate without tougher regulation and oversight. They pressed the company to strengthen its parental controls and make them more effective.

Introduction of the AI On-Off Switch

Miko responded that it would add a parental control that disables the toy’s conversational AI. This means parents can switch off open-ended AI conversations. With the AI switched off, the robot can still perform some functions, such as playing preloaded games, telling programmed stories, and providing basic educational activities, but it will stop producing live, AI-generated responses in conversation.

Notably, Miko also explained that conversational AI will be off by default in future devices. Parents will have to enable the feature if they want their child to use it. This opt-in approach gives families more control over how the technology is used at home.
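
For readers curious how such a switch might behave in software, the following is a minimal sketch in Python of an opt-in toggle. It is purely illustrative: the names `ParentalControls`, `route_request`, and `PRELOADED_ACTIVITIES` are hypothetical, and nothing here reflects Miko’s actual software. It assumes only what is described above: preloaded content keeps working, while live AI conversation requires a parent to turn the feature on.

```python
from dataclasses import dataclass

# Hypothetical names throughout: this is not Miko's actual software or API.
PRELOADED_ACTIVITIES = {"game", "story", "lesson"}


@dataclass
class ParentalControls:
    # Opt-in: conversational AI stays off until a parent enables it.
    conversational_ai_enabled: bool = False


def route_request(kind: str, controls: ParentalControls) -> str:
    """Decide how the toy handles a request of the given kind."""
    if kind in PRELOADED_ACTIVITIES:
        # Preloaded content works regardless of the switch.
        return "preloaded"
    if kind == "conversation" and controls.conversational_ai_enabled:
        return "live_ai"
    # Anything else, including conversation while the switch is off, is refused.
    return "unavailable"


controls = ParentalControls()                    # fresh device, AI off
print(route_request("story", controls))          # preloaded story still plays
print(route_request("conversation", controls))   # refused until enabled
controls.conversational_ai_enabled = True        # parent opts in
print(route_request("conversation", controls))   # live AI now allowed
```

The design choice worth noting is the default value: because the flag starts as `False`, a device that is never configured simply never starts an open-ended AI conversation.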

Privacy and Safety Debate

The episode has added a new dimension to the larger problem of AI safety in children’s products. AI systems depend on processing data to work. For toys used by children, the questions are how that data is stored, who has access to it, and how well it is secured.

Child safety advocates argue that companies should adhere to strict standards when designing AI systems for minors. When children talk to smart devices, they might unwittingly share personal information. Without strong protection, such information could be misused or leaked. Technology companies, for their part, argue that AI-based learning tools can deliver real educational value if they are handled correctly. Interactive robots such as Miko can help children develop curiosity, practice language, and solve problems.

Miko’s Official Position

Miko has stated that it takes privacy and safety seriously. The company emphasized that it does not store children’s voice recordings and that it uses security systems to protect its platforms. According to company representatives, the AI off switch is part of a broader effort to strengthen parental control features.

Miko also explained that the exposed database reportedly contained AI-generated responses rather than identifiable personal data. However, critics argue that even AI-generated content connected to children should be handled with maximum caution.

By adding the new toggle feature, Miko aims to rebuild trust and show that it is listening to concerns from both lawmakers and parents.

Industry-Wide Implications

The Miko controversy reflects a larger challenge facing the technology industry. As artificial intelligence becomes more advanced and more common in consumer products, regulation often struggles to keep pace. Many AI-powered devices are introduced to the market before clear government guidelines are established. Currently, there are limited specific laws in the United States directly regulating AI in children’s toys. Existing child privacy laws apply to online data collection, but AI-driven conversations represent a newer and more complex challenge.

Experts believe this situation could push lawmakers to consider clearer rules for AI products targeted at children. Stronger regulations could include mandatory parental controls, stricter data storage requirements, and greater transparency from companies.

Public Reaction

Parents have reacted in varied ways. Some welcome the new AI off switch because it offers needed control and confidence. Others think such a feature should have existed from the start. The issue has also prompted debate among educators and child development specialists. Some view AI learning technology as a useful tool under supervision, while others fear that children will be over-exposed to technology at an early age.

The debate raises a key question: how much AI interaction should children have? As AI increasingly enters everyday life, families are having to make decisions about technology use sooner than ever.

Broader Questions About AI and Trust

The Miko case points to a turning point in consumer AI. Trust is a key factor in technology adoption, and the bar should be higher when products are aimed at children. Companies bringing AI into homes should recognize that innovation alone is not enough; clear communication, transparency, and security are also crucial. People can be anxious about AI, so a quick response and visible improvements, such as the off switch, can ease public concern. But long-term trust will depend not only on company policies but also on stronger industry standards and, possibly, new regulations.

As AI spreads further into everyday products, the debate over safety, privacy, and ethical use is unlikely to quiet down. Miko’s introduction of an AI off switch may be one small step toward addressing those issues, but it also marks the start of a larger conversation about how artificial intelligence should engage with children in the digital era.